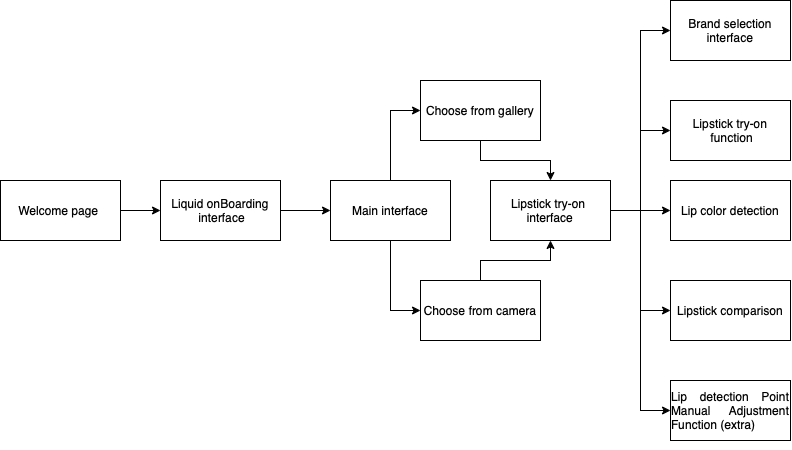

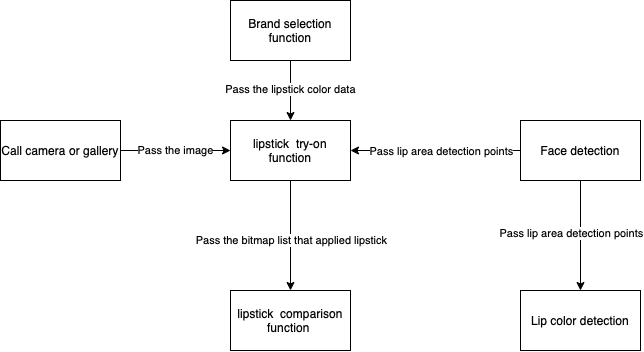

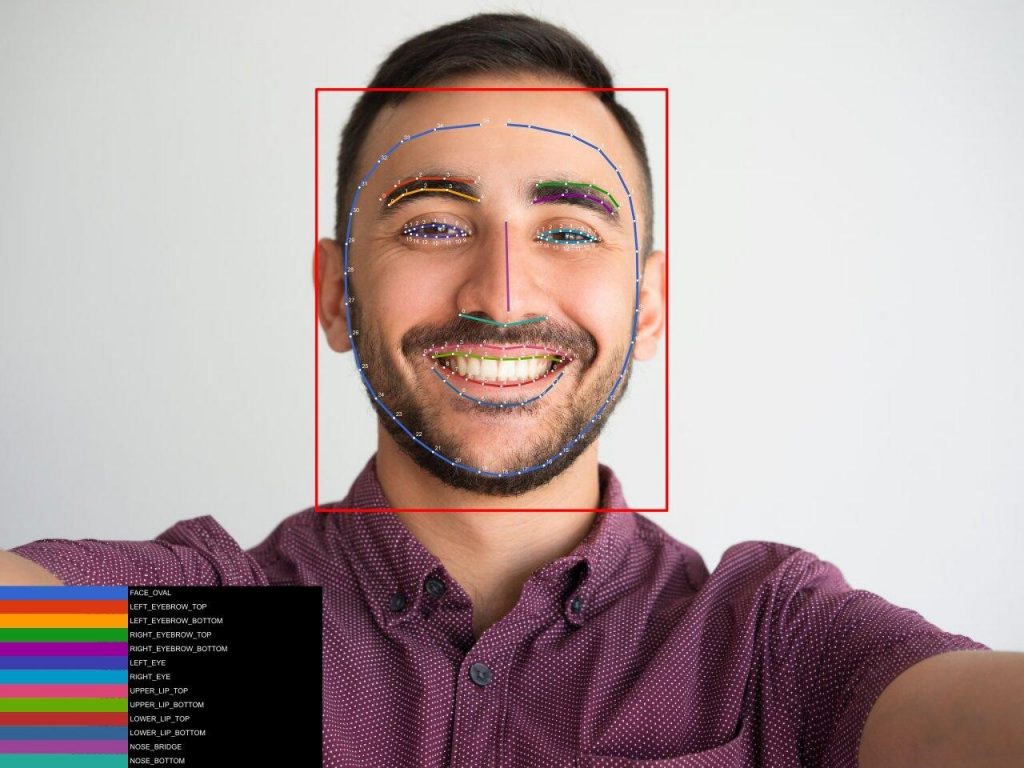

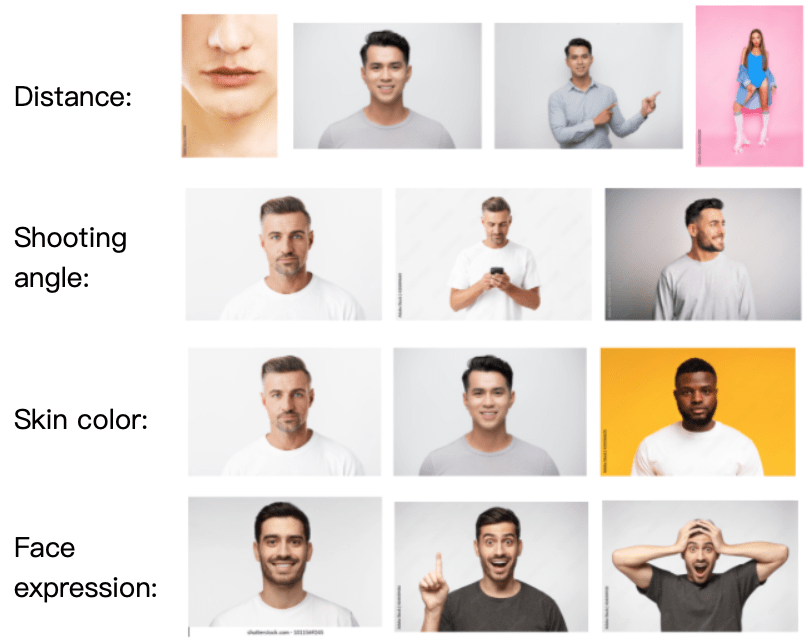

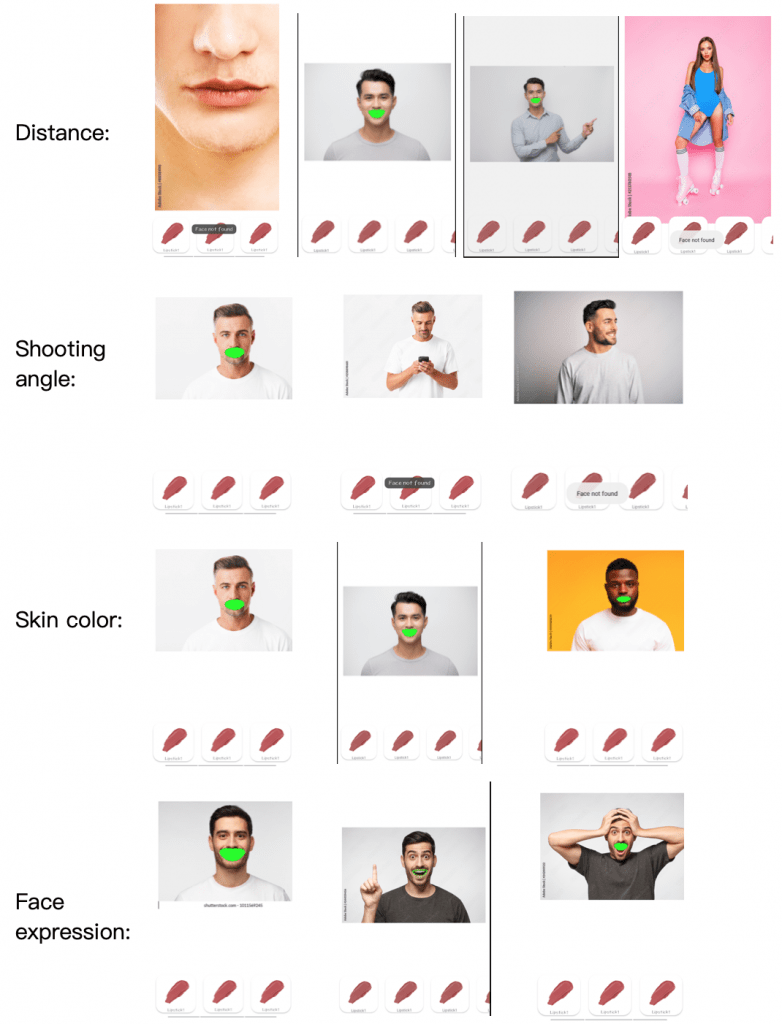

I ran the first test once the face detection code was complete. I tested around 30 images covering all four factors as well as the environment factor, and I used Canvas to draw the detection points so that the detection results are easier to see. The sample image groups again serve as examples, and the results are shown in Figure 11. The test results fall into three cases: face not found, face detected inaccurately, and face detected correctly. If nothing can be detected in the lip image, the text “face not found” is shown on the screen; otherwise, the detection points are drawn on the image. The drawn points make it possible to judge whether the detection is correct.
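The three cases above can be tallied with a small helper while working through the test images. This is only a minimal sketch in plain Java: `Outcome`, `DetectionLog`, `record`, and `count` are hypothetical names, not part of the app, and the "correct versus inaccurate" decision is assumed to stay a visual judgement made by the tester from the drawn points.

```java
import java.util.EnumMap;
import java.util.Map;

// Hypothetical names for the three cases described in the text.
enum Outcome { FACE_NOT_FOUND, DETECTED_INACCURATELY, DETECTED_CORRECTLY }

// Hypothetical helper that counts how many test images fall into each case.
class DetectionLog {
    private final Map<Outcome, Integer> counts = new EnumMap<>(Outcome.class);

    // pointCount: number of detection points returned for the image
    //             (0 is assumed to mean that nothing was detected).
    // looksCorrect: the tester's visual judgement of the drawn points.
    Outcome record(int pointCount, boolean looksCorrect) {
        Outcome outcome;
        if (pointCount == 0) {
            outcome = Outcome.FACE_NOT_FOUND;
        } else if (looksCorrect) {
            outcome = Outcome.DETECTED_CORRECTLY;
        } else {
            outcome = Outcome.DETECTED_INACCURATELY;
        }
        counts.merge(outcome, 1, Integer::sum);
        return outcome;
    }

    // Number of images recorded for the given case so far.
    int count(Outcome outcome) {
        return counts.getOrDefault(outcome, 0);
    }
}
```

Recording one entry per test image gives a per-case count that can be compared between test runs.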

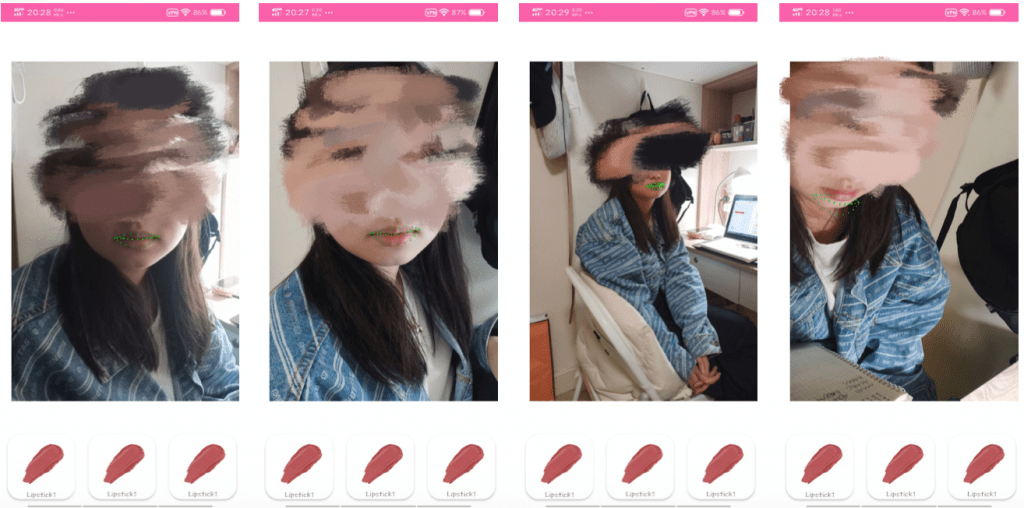

The first test was run on the emulator in Android Studio. To make the face detection evaluation more accurate, I ran the second test on a real device, an Android phone running Android 9. For this test, I first found a photo model and photographed her at different angles, distances, and lighting conditions. In addition, the lip images tested in the emulator were tested again on the real device to compare the results. As an example, Figure 12 shows the test result for the model on the real device, with the images uploaded from the camera. Face detection works on the real device, and the results are similar to those from the emulator. The details are discussed in the test result section. The final output of the lipstick try-on function has to be tested together with the brand selection function, because the lipstick data is passed from the brand selection function.

Brand Selection Function & Lipstick Comparison Function

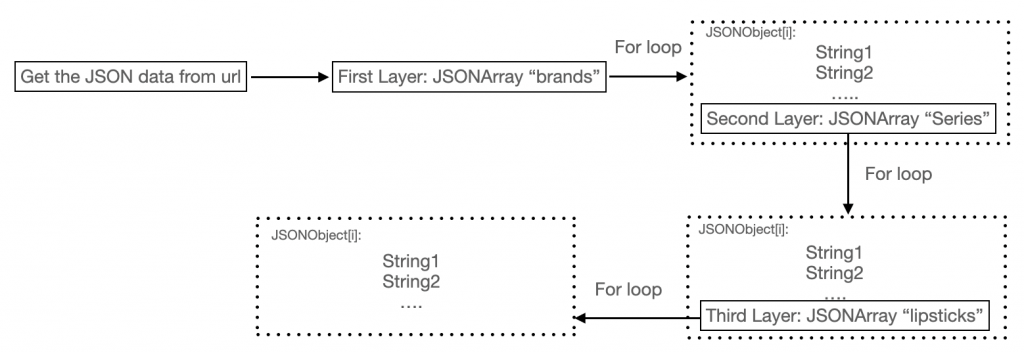

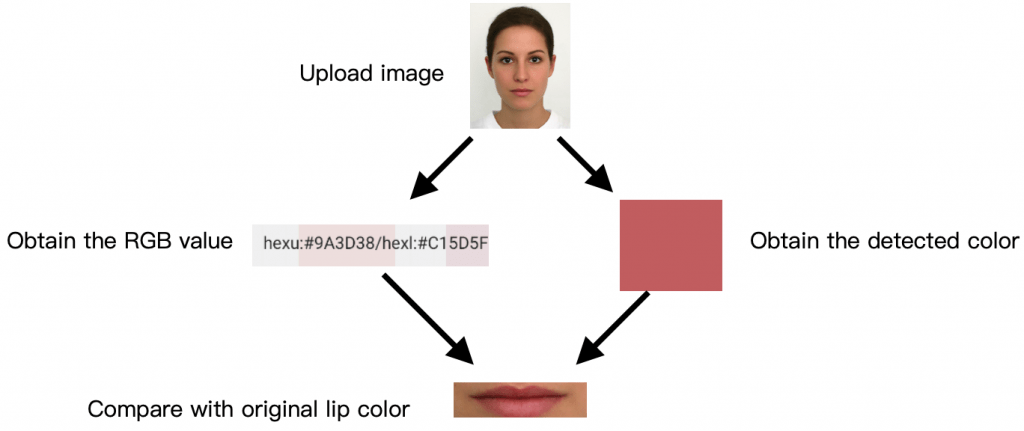

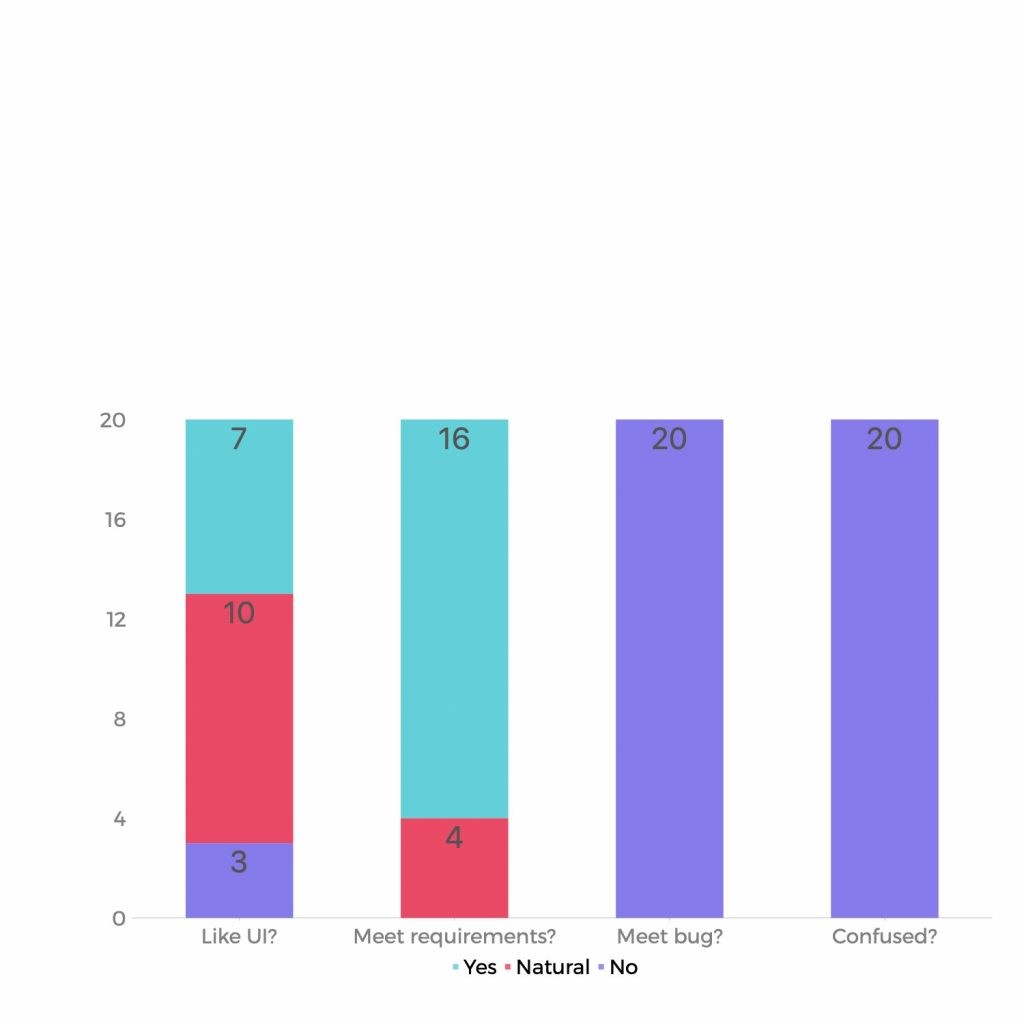

The brand selection function and the lipstick comparison function were easy to test: I clicked the functional buttons and checked the presented results. For the brand selection function, the test objects are brand selection, series selection, lipstick selection, and the highlight effect of the selected option. First, I pressed the different brand buttons to see whether the series list changed correctly. Then I chose a series to see whether the interface switched to the try-on interface and showed the correct lipstick list. Lastly, I selected one of the lipsticks to see whether the color was applied to the lip image and whether the color was correct. This step is also part of the test of the lipstick try-on function: after the lipstick color is applied, it shows how well the try-on works, for example, whether the lipstick fits the contour of the user’s lips and whether the try-on effect looks natural. The highlight effect of the selected option also needs to be tested. I tested it by pressing different buttons to see whether the pressed option was highlighted and whether the highlight moved away from the previous option. I also switched to another interface and came back to see whether the highlight was still in the right position.

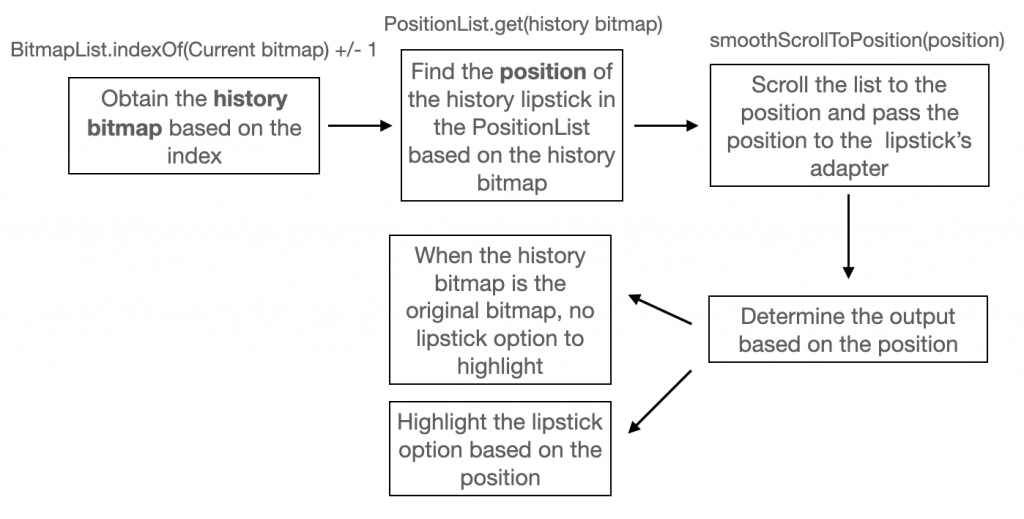

For the lipstick comparison function, the evaluation criteria are mainly whether the undo and redo functions work properly, whether the lipstick list scrolls to the corresponding history option, and whether the highlight moves to the current history option when the undo or redo button is pressed. After completing this part of the coding, I tested the brand selection and lipstick comparison functions every time I ran the emulator during the subsequent development, and every run was successful without any error.
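The undo and redo behavior checked here can be modeled as a list of selections with a cursor. The following is a minimal sketch in plain Java under that assumption, not the app's actual implementation; `LipstickHistory` and its methods are hypothetical names. The value returned by `undo()` or `redo()` is the list position that the lipstick list would scroll to and highlight.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the comparison history; not the app's actual class.
class LipstickHistory {
    // List positions of the lipsticks selected so far, oldest first.
    private final List<Integer> entries = new ArrayList<>();
    // Index of the current entry in the history, -1 while the history is empty.
    private int cursor = -1;

    // Record a new selection and discard any entries that could have been redone.
    void select(int listPosition) {
        while (entries.size() > cursor + 1) {
            entries.remove(entries.size() - 1);
        }
        entries.add(listPosition);
        cursor = entries.size() - 1;
    }

    boolean canUndo() {
        return cursor > 0;
    }

    boolean canRedo() {
        return cursor >= 0 && cursor < entries.size() - 1;
    }

    // Step back one entry if possible; returns the position to scroll to and highlight.
    int undo() {
        if (canUndo()) {
            cursor--;
        }
        return current();
    }

    // Step forward one entry if possible; returns the position to scroll to and highlight.
    int redo() {
        if (canRedo()) {
            cursor++;
        }
        return current();
    }

    // Current list position, or -1 if nothing has been selected yet.
    int current() {
        return cursor >= 0 ? entries.get(cursor) : -1;
    }
}
```

With a model like this, each criterion above becomes a direct check: after pressing undo or redo, the scrolled-to item and the highlighted item should both match `current()`.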